TLDR;

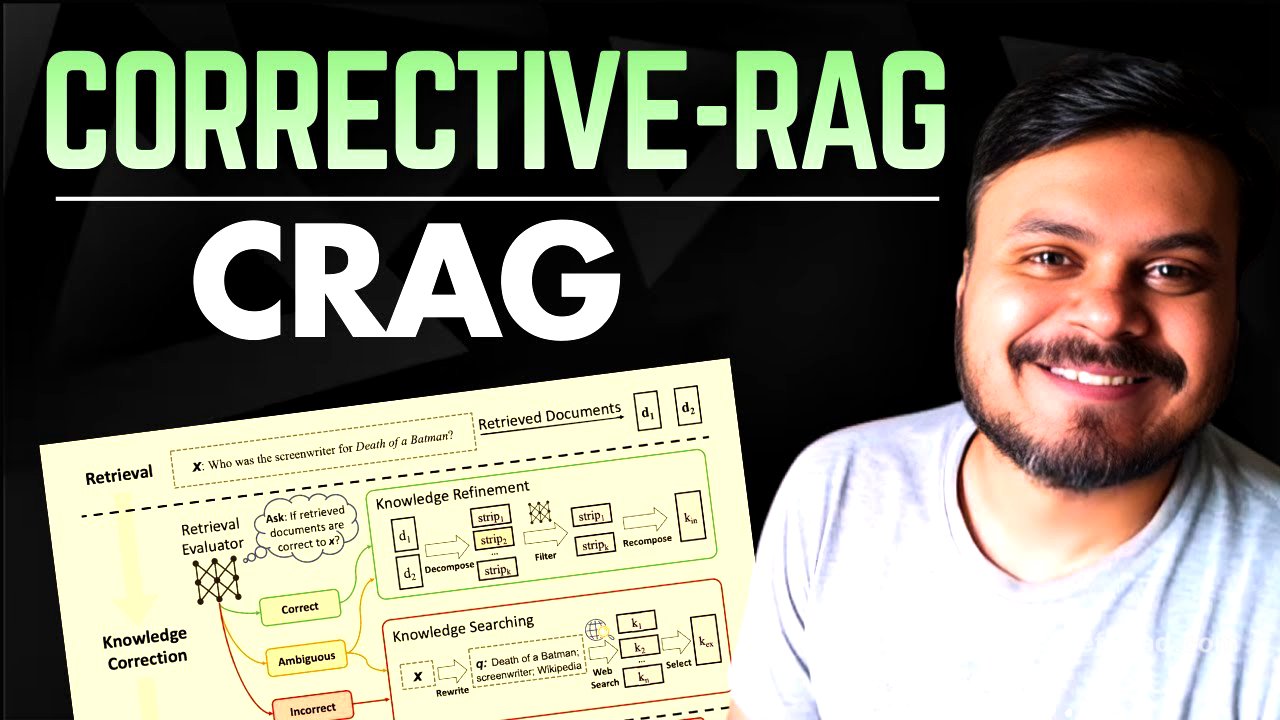

This video is all about Corrective RAG (CRAG), a variant of the traditional RAG (Retrieval-Augmented Generation) model, and how to implement it using LangGraph. It addresses the "blind trust" issue in traditional RAG where the LLM blindly trusts retrieved documents, even if they are irrelevant, leading to incorrect answers. The video explains the CRAG architecture, which includes a retrieval evaluator to determine the relevance of retrieved documents and integrates web search for better results. The implementation is built step-by-step, starting from a basic RAG and adding features like knowledge refinement, retrieval evaluation, and web search integration.

- CRAG addresses the "blind trust" issue in traditional RAG.

- It uses a retrieval evaluator to check the relevance of retrieved documents.

- Web search is integrated to handle irrelevant or ambiguous retrievals.

- The implementation is built step-by-step using LangGraph.

What is Corrective RAG (CRAG)? [0:00]

The video introduces Corrective RAG (CRAG) as an enhanced version of the traditional RAG model. The presenter, Nitesh, explains that the video will cover both the conceptual understanding of CRAG and its practical implementation using LangGraph. The primary motivation for CRAG is to address the limitations of traditional RAG, particularly when the retrieved documents are not relevant to the user's query. To fully appreciate CRAG, one should have a basic understanding of how traditional RAG works, which is covered in the presenter's previous videos on LangChain.

The problem with Traditional RAG: "Blind Trust" & Hallucinations [1:12]

Traditional RAG involves a workflow where a user's query is converted into a vector using an embedding model. This vector is then used to perform a semantic search in a vector database containing private documents stored as vectors. The retrieved documents and the original query are fed to a Large Language Model (LLM) with a prompt, instructing the LLM to generate an answer based on the provided documents. The key problem is that the LLM blindly trusts the retrieved documents. If the retrieved documents are irrelevant, the LLM is forced to generate an incorrect answer based on those documents. This can lead to significant issues, especially in business scenarios where accurate information is crucial.

Visualising the Vector Database & Retrieval Workflow [2:00]

The presenter explains the process of converting a user query into a vector using an embedding model. This vector is then used to perform a semantic search in a vector database. The vector database stores private documents in the form of vectors. The goal is to find the vectors in the database that are closest to the query vector. This step is called retrieval, and it results in a set of documents that should contain information relevant to the query.

Practical Example: When LLMs fail on "Out of Distribution" queries [4:22]

To illustrate the problem, the presenter provides a practical example. If a user asks "What is an LLM?" and the vector database only contains books on machine learning, the retrieved documents will likely be irrelevant (e.g., random forest documentation). The LLM, trusting these irrelevant documents, will generate an incorrect answer. This issue can have serious implications in business settings, where employees might rely on incorrect information for decision-making. Corrective RAG aims to solve this problem by not blindly trusting the retrieved documents.

Code Walkthrough: Loading ML books and creating a basic Retriever [7:04]

The presenter walks through the code setup, which involves loading three machine learning and deep learning books into a folder. The goal is to create a RAG chatbot based on these books. The code walkthrough is essential because the subsequent CRAG implementation will be built upon this foundation. The code includes importing necessary libraries, loading the documents, splitting the text into chunks using RecursiveCharacterTextSplitter, and handling encoding issues to avoid errors.

Testing the Baseline: Bias-Variance Tradeoff vs. Recent AI News [10:17]

The code walkthrough continues with creating embeddings using OpenAI's embedding model and using a FAISS database for storing the vectors. A retriever is created to perform similarity searches and retrieve the top four documents for a given query. The presenter then sets up a LangGraph state with components for the question, retrieved documents, and the answer generated by the LLM. The graph structure is simple, consisting of a retrieval node and a generation node. The presenter tests the setup with the question "What is bias-variance tradeoff?" which is expected to be answered correctly since it's covered in the books.

Identifying Hallucinations in the Transformer architecture query [13:51]

The presenter demonstrates a scenario where the traditional RAG system fails. When asked "What is a transformer in deep learning?", the system provides an answer, but the retrieved documents do not contain any information about transformer architecture. The answer is generated from the LLM's parametric knowledge, which can lead to hallucinations if the LLM lacks specific knowledge. This highlights the problem with traditional RAG: it relies on the LLM's internal knowledge when relevant documents are not found, increasing the risk of generating incorrect or hallucinated information.

Deep Dive: The CRAG Research Paper & Proposed Architecture [15:53]

The video transitions to discussing Corrective RAG (CRAG) and how it addresses the problems of traditional RAG. In CRAG, after retrieving documents, a retrieval evaluator is introduced to assess the usefulness of the retrieved documents for answering the query. The retrieval evaluator determines if the documents are relevant, irrelevant, or ambiguous. Based on this assessment, CRAG takes different actions. If the documents are relevant, it proceeds like a normal RAG. If irrelevant, it uses external knowledge sources like web search. If ambiguous, it combines both internal and external knowledge.

The 3 Retrieval Cases: Correct, Incorrect, and Ambiguous [17:20]

The presenter elaborates on the three cases handled by CRAG: correct, incorrect, and ambiguous retrievals. If the retrieved documents are relevant (correct), the process continues like traditional RAG. If the documents are irrelevant (incorrect), CRAG uses external knowledge sources like web search to find relevant information. If the documents are partially relevant (ambiguous), CRAG combines the relevant parts of the retrieved documents with information from web search to generate an answer. The key difference is that CRAG does not blindly trust the retrieved documents and uses a retrieval evaluator to make informed decisions.

Retrieval Evaluator: Refining Internal vs. External Knowledge [21:01]

The presenter refers to the original CRAG paper, emphasizing the architecture used by the researchers. The paper highlights the use of a retrieval evaluator to determine the relevance of retrieved documents. The evaluator assesses whether the documents (d1, d2) are suitable for answering the question (x). If the documents are correct, they are refined to create "knowledge internal." If incorrect, a web search is performed to obtain "knowledge external." If partially correct, both internal and external knowledge are combined to generate the answer. The presenter aims to replicate this architecture using LangGraph, adding complexity step by step.

Iteration 1: Knowledge Refinement (Decomposition & Filtration) [23:02]

The first iteration involves adding a knowledge refinement feature to the traditional RAG system. This feature refines the retrieved documents to improve the quality of the generated answer. Knowledge refinement is applied when the retrieved documents are deemed relevant. The process involves three steps: decomposition, filtration, and recomposition. Decomposition involves breaking down the document into smaller units (sentences or short phrases). Filtration involves using a model to determine the relevance of each unit to the query. Recomposition involves merging the relevant units back into a refined document.

Code: Building the Refined Value Node with Sentence Strips [30:11]

The presenter walks through the code for implementing knowledge refinement. The code is based on the previous traditional RAG setup, with the addition of a new node for refinement. The state is updated to include "strips" (individual sentences), "kept_strips" (relevant sentences after filtering), and "refined_context" (the combined relevant sentences). A function is created to decompose the documents into sentences. A prompt is designed for the LLM to act as a strict relevance filter, determining whether each sentence directly helps answer the question. The LLM outputs a true/false value for each sentence.

Iteration 2: Retrieval Evaluation (Thresholding Logic) [35:40]

The second improvement involves adding retrieval evaluation. The goal is to determine the quality of the retrieved documents and decide whether they are sufficient to answer the query. This is done using an LLM as a retrieval evaluator. The LLM assigns a relevance score between 0 and 1 to each retrieved document, indicating how well the document answers the query. Lower and upper thresholds (0.3 and 0.7) are defined. If at least one document's score is above the upper threshold, the retrieval is considered "correct." If no document's score is above the lower threshold, it's "incorrect." Otherwise, it's "ambiguous."

Code: Implementing the Evaluation Node & Pydantic Schema [45:36]

The presenter explains the code implementation for retrieval evaluation. A Pydantic schema is created to structure the output from the LLM, including a score and a reason for the score. A system prompt is designed to instruct the LLM to act as a strict retrieval evaluator, providing a relevance score between 0 and 1 for each document. The evaluation node iterates through the retrieved documents, sends each document to the LLM for evaluation, and stores the scores and reasons. Based on the scores and the defined thresholds, the node determines whether the retrieval is correct, incorrect, or ambiguous.

Testing the Evaluator: Correct vs. Incorrect Verdicts [51:40]

The presenter tests the retrieval evaluator with different queries. For a query like "bias variance trade off," which is well-covered in the books, the evaluator correctly identifies the retrieval as "correct." For a query like "AI news from last week," which is not covered in the books, the evaluator identifies the retrieval as "incorrect." A mixed query results in an "ambiguous" verdict. The presenter emphasizes that the system can now evaluate the quality of the retrieval and provide a verdict on whether the retrieved documents are sufficient.

Iteration 3: Web Search Integration using Tavily [53:42]

The third iteration adds web search integration using the Tavily API. If the retrieval evaluator determines that the retrieved documents are "incorrect," the system will now perform a web search to find relevant information. The philosophy is to provide the user with a correct result, even if the internal documents are insufficient. The web search results are then used to generate the answer. The presenter notes that the web search results also need to be filtered to ensure they are relevant.

Iteration 4: Query Rewriting for Better Search Results [1:00:43]

The next improvement is query rewriting. Before performing a web search, the original user query is rewritten to be more suitable for a search engine. User queries can be vague or lack specific keywords, leading to poor search results. Rewriting the query involves using an LLM to generate a better search query that is clear, specific, and keyword-rich. This rewritten query is then used to perform the web search, resulting in more relevant and accurate results.

Handling Ambiguous Knowledge: Merging Internal & External Context [1:07:18]

The final piece of the architecture is handling ambiguous cases. In these cases, the retrieved documents are partially relevant but not sufficient to fully answer the query. The approach is to combine the relevant parts of the retrieved documents (good_docs) with information obtained from web search (web_docs). The refinement process then operates on both the internal and external knowledge to create a combined context for generation. This ensures that the generated answer leverages both the available internal knowledge and the additional information from the web.

Conclusion: Comparing our implementation to the Original Paper [1:14:19]

The presenter concludes by summarizing the implemented CRAG architecture. The system can now perform retrieval, evaluate the quality of the retrieved documents, and handle correct, incorrect, and ambiguous cases. Correct cases use knowledge refinement and internal knowledge. Incorrect cases use web search and external knowledge. Ambiguous cases combine both. The presenter acknowledges that some aspects of the original paper, such as using a T5 transformer, were not implemented due to resource constraints. However, the core architecture and functionality of CRAG have been successfully replicated.