TLDR;

This video provides a foundational overview of designing a scalable social media platform capable of handling millions of users. It covers essential concepts such as distributed systems, load balancing, caching strategies, database choices (SQL vs. NoSQL), indexing, and data partitioning. The video highlights the importance of scalability, reliability, availability, and efficiency in system design, while also addressing the challenges and trade-offs involved in creating a robust and responsive platform.

- Distributed systems are crucial for handling growing user demands.

- Load balancing ensures efficient distribution of incoming requests across multiple servers.

- Caching improves data retrieval speed, but requires careful validation strategies.

- Database choice (SQL vs. NoSQL) depends on data structure, scalability needs, and consistency requirements.

- Indexing speeds up search operations but can slow down write operations.

- Data partitioning enhances performance and availability by breaking large databases into smaller parts.

Introduction to Scalable System Design [0:00]

The video addresses the challenge of designing a social media platform that can handle millions of user requests. It begins by noting that a simple web server and single database setup is insufficient for scaling to meet the demands of a large user base. Distributed systems, defined as networks of independent computers working as a single coherent system, are presented as the solution.

Key Characteristics of Distributed Systems [0:24]

The key characteristics of distributed systems are scalability, reliability, availability, and efficiency. Scalability refers to the system's ability to handle growing demands through horizontal (adding more servers) or vertical (upgrading hardware) scaling. Reliability ensures the system functions correctly even when components fail. Availability is the percentage of time a system remains operational, often expressed in nines (e.g., 99.9% availability means 8.76 hours of downtime per year). Efficiency is measured by latency (delay in getting the first response) and throughput (number of operations handled in a given time). The CAP theorem states that a distributed system can only guarantee two out of three properties: consistency, availability, and partition tolerance.

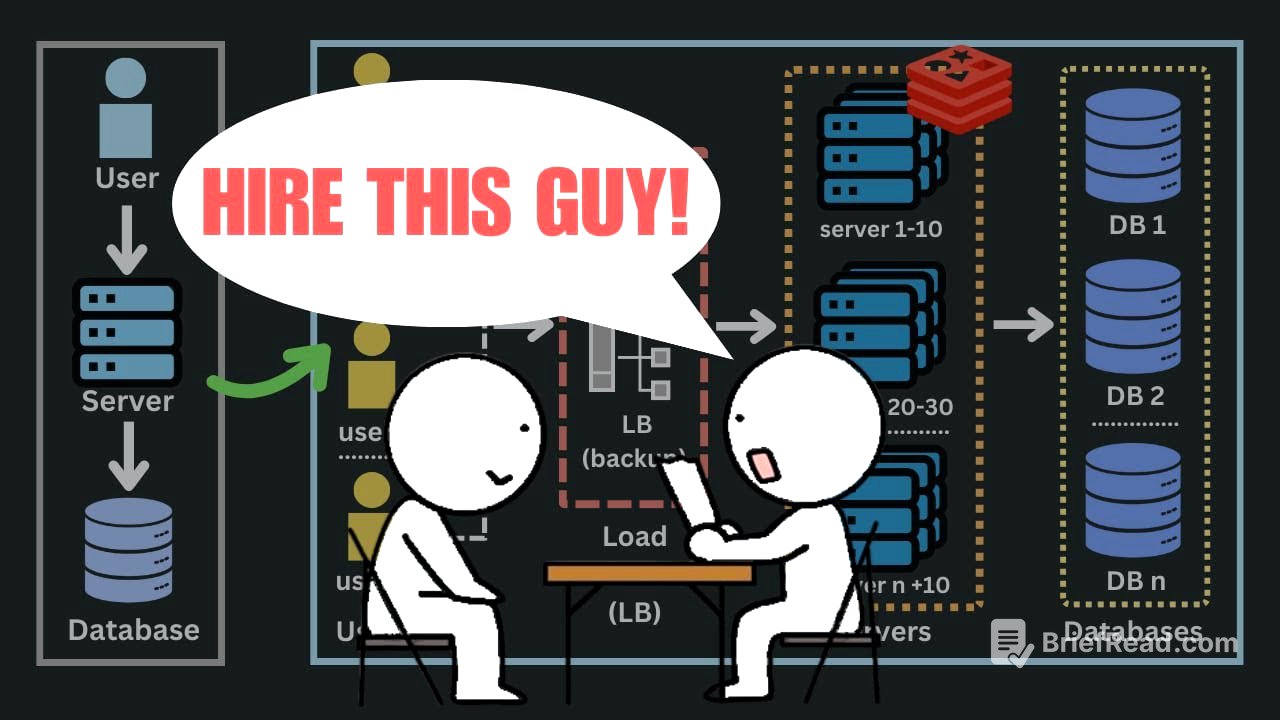

Load Balancing [1:52]

To manage load efficiently in a distributed system, a load balancer is needed to distribute incoming requests across multiple servers, preventing any single server from being overwhelmed. If a server fails, the load balancer redirects traffic to healthy servers. Load balancers can be placed at various levels, such as between users and web servers, web servers and application servers, and application servers and databases. Common load balancing algorithms include least connection method, round robin, and IP hash. To prevent the load balancer itself from becoming a single point of failure, a standby load balancer can be added to take over if the primary one fails.

Caching Strategies [2:57]

Caching is introduced to address the issue of servers frequently requesting the same data from the database. Caching leverages the principle that recently requested data is likely to be requested again, and retrieving data from cache is faster than from the database. Content Delivery Networks (CDNs) are used for caching static media closer to the user to reduce latency. Caching challenges include maintaining data consistency and ensuring data is synchronized with the source of truth. Cache validation strategies include write-through (data written to both cache and storage simultaneously), write-around (data bypasses cache and goes directly to storage), and write-back (data written to cache first, then to storage later). Cache eviction policies, such as least recently used (LRU), first-in-first-out (FIFO), and least frequently used (LFU), are used to make room for new data when the cache reaches capacity.

SQL vs NoSQL Databases [4:26]

The video discusses storage strategies, comparing SQL and NoSQL databases. SQL databases store data in tables with predefined schemas, while NoSQL databases offer more flexible data structures. NoSQL databases come in four main types: key-value stores, document databases, wide-column stores, and graph databases. SQL databases have a rigid schema and use SQL for querying, while NoSQL databases have a flexible schema and use collection-focused queries. SQL typically scales vertically (though horizontal scaling via sharding is possible), while NoSQL scales horizontally. SQL is ACID compliant, while NoSQL often sacrifices this for performance and scalability. ACID properties include atomicity, consistency, isolation, and durability. SQL is suitable for applications needing ACID compliance and when data structures are stable, while NoSQL is suitable for large volumes of unstructured data and rapid development requiring flexibility.

Indexing [6:18]

Indexing is introduced as a solution to slow query performance. Indexes create separate data structures that point to the location of actual data, speeding up search operations. Common types of indexes include primary key indexes, secondary indexes, and composite indexes. Foreign keys are constraints that enforce relationships between columns in different tables. While indexes improve read performance, they can slow down write operations because the index must be updated with each data modification.

Data Partitioning [7:22]

Data partitioning is presented as a technique for breaking large databases into smaller, more manageable parts to improve performance, availability, and load balancing. Partitioning methods include horizontal partitioning (dividing rows across multiple databases), vertical partitioning (separating features or columns into different databases), and directory-based partitioning (using a lookup service to abstract the partitioning scheme). Partitioning can be based on various criteria, such as key or hash-based partitioning (using a hash function to determine the partition), list partitioning (assigning each partition a list of values), round robin (distributing data evenly across partitions), and composite partitioning (combining multiple methods). Consistent hashing minimizes data redistribution when scaling the number of servers. Challenges of partitioning include difficulty in joining across multiple partitions and data rebalancing.