TL;DR

This episode of ARK Invest's "Brainstorm" discusses the advancements and user experience of GPT-5, highlighting the shift from model selection to an integrated system. It addresses the mixed community reception, with some users missing the "humanness" of previous models. The conversation touches on the importance of model personality, the challenges of benchmarking AI, and the potential for AI-induced psychosis, concluding with a call for user feedback on novel AI applications.

- GPT-5 offers a more integrated user experience by automatically routing users to the most suitable model.

- User feedback on AI models is crucial, as demonstrated by the backlash against GPT-5's initial personality.

- The personality of AI models is becoming increasingly important for user engagement and attachment.

- Benchmarking AI performance requires evolving metrics that reflect real-world use cases and error rates.

- AI could have a significant impact on individuals, possibly including AI-induced psychosis or the emergence of AI-centered religious cults.

GPT-5 Performance Analysis [0:00]

The discussion begins with an overview of GPT-5 that goes beyond surface-level analyses to examine its impact and potential flaws. A key question is whether GPT-5 is a significant advance or merely incremental progress in paying down technical debt. One participant, speaking as a power user, reports a clear improvement in user experience with GPT-5. The conversation then turns to the limits of traditional benchmarks in assessing the practical utility of AI tools, arguing that the number of real-world active users is the primary benchmark. GPT-5's major advance is identified as a product improvement in how the system is presented to end users, which helps them understand it and integrate it into their daily lives.

New ChatGPT Model Loses Its Human Qualities [4:52]

The conversation addresses the split community reaction to GPT-5's usability, citing a tweet that expresses disappointment in its lack of "fun." This reflects a broader complaint among some users that the model lacks the humanness of previous versions. OpenAI's response to the feedback, including Sam Altman's Reddit AMA, shows how much user sentiment matters. The discussion touches on the emotional connection users form with AI models and how it affects their choice between AI platforms. Qualitative aspects of AI interaction, such as a model's personality and the nuance of its responses, are gaining importance.

Friend Vs. Tool [7:16]

The discussion explores how even power users are "operating pretty blind" about how best to use the models, because OpenAI does not provide clear guidance. The idea of metaprompting is introduced, in which the model automatically improves the user's prompt. The router at the top end injects synthetic data and decides, based on the query, whether to spend more test-time compute. GPT-5 also lets users select between different personalities. Replacing a user's preferred model with a different one can cause dissatisfaction, which shows that users form ongoing relationships with specific AI models.
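To make the routing idea concrete, here is a toy sketch of a query router in Python. It is not how OpenAI's router works, which the episode does not detail: the model names, the cue words, and the 40-word threshold are all hypothetical, chosen only to show the shape of the decision (a cheap, fast model for simple queries, more test-time compute for harder ones).

```python
from dataclasses import dataclass

# Hypothetical placeholder names, not OpenAI's real routing targets.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# Hypothetical cue words (matched as substrings) that suggest multi-step reasoning.
REASONING_CUES = ("prove", "step by step", "debug", "derive", "plan", "compare")


@dataclass
class Route:
    model: str
    extra_test_time_compute: bool


def route(query: str, max_fast_words: int = 40) -> Route:
    """Toy router: short, simple queries go to the fast model; long or
    reasoning-heavy queries go to a model that spends more test-time compute."""
    text = query.lower()
    needs_reasoning = any(cue in text for cue in REASONING_CUES)
    too_long = len(query.split()) > max_fast_words
    if needs_reasoning or too_long:
        return Route(REASONING_MODEL, True)
    return Route(FAST_MODEL, False)


if __name__ == "__main__":
    print(route("What's the capital of France?"))
    print(route("Prove that the square root of 2 is irrational."))
```

A production router would presumably rely on learned signals rather than keyword matching; the hard-coded rules here only keep the sketch readable.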

Relationships Vs. Knowledge [12:54]

Because OpenAI has such a large user base, it can see in its data that engagement dropped for a subset of users. Why people like GPT-4o is not something benchmarks can capture; the only benchmark that reflects it is engagement across the user base. The personality of these models is starting to have a real impact on usage. Unlike changes to Google's search algorithm, tweaks to AI models can provoke a backlash because users develop human-like relationships with them.

GPT-5 Key Benchmarks [15:50]

The discussion shifts to model performance, noting that GPT-5 did not show hockey-stick improvements across the board. The panel criticizes presenting benchmark results on the wrong scale, since it does not reflect how hard it is to keep reducing error rates; the focus should be on the error rate and the log error rate. GPT-5's 100% score on the AIME math benchmark is dismissed as irrelevant to practical use. Internal benchmarks, such as the hallucination rate, show real improvement: GPT-5 is more honest and less likely to give a confident answer when it lacks the necessary information.
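A short worked example shows why the error rate, and its logarithm, is a better lens than raw accuracy. The accuracy figures below are made up for illustration and are not GPT-5's reported scores, and the helper names are hypothetical. Going from 90% to 95% and from 99% to 99.5% both halve the error rate, yet on a linear accuracy chart the second step looks ten times smaller.

```python
import math


def error_rate(accuracy: float) -> float:
    """Fraction of questions answered incorrectly, for accuracy in [0, 1)."""
    return 1.0 - accuracy


def linear_gain(acc_before: float, acc_after: float) -> float:
    """Improvement in accuracy points, as a plain accuracy bar chart shows it."""
    return (acc_after - acc_before) * 100


def log_error_gain(acc_before: float, acc_after: float) -> float:
    """Drop in log10(error rate); 1.0 means ten times fewer errors."""
    return math.log10(error_rate(acc_before)) - math.log10(error_rate(acc_after))


if __name__ == "__main__":
    # Illustrative accuracy pairs, not real benchmark results.
    for before, after in [(0.90, 0.95), (0.99, 0.995)]:
        print(f"{before:.1%} -> {after:.1%}: "
              f"+{linear_gain(before, after):.1f} points, "
              f"log-error gain {log_error_gain(before, after):.3f}")
```

Both transitions give the same log-error gain of about 0.301 (log10 of 2), even though the second adds only half a point of accuracy. On a log-error scale each halving of errors counts equally, which better reflects the difficulty of reducing error rates that the episode points to.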

OpenAI’s Tough Development Decision Ahead [21:46]

The discussion highlights how engagement baiting and emotion help attract users. The backlash against GPT-5 shows that models need to pass a personality check to reach a mass audience. Model personality is not especially sticky until users move into voice mode. OpenAI will be able to take these GPT-4o users and create a ChatGPT experience on GPT-5 that uses more emojis and is more solicitous toward them. The choice resembles hiring: how much do you care whether a person can do the job, versus whether they are a culture fit you will enjoy interacting with?

Becoming An AI Power User [29:37]

The discussion turns to strategies for improving one's skill with these models; trial and error and asking the model directly are key. The presented capability of these systems always runs somewhat ahead of their practical capability. It is important to experiment with different models and test different activities against each one. There is a big opportunity to learn from other people's experiences with AI tools.

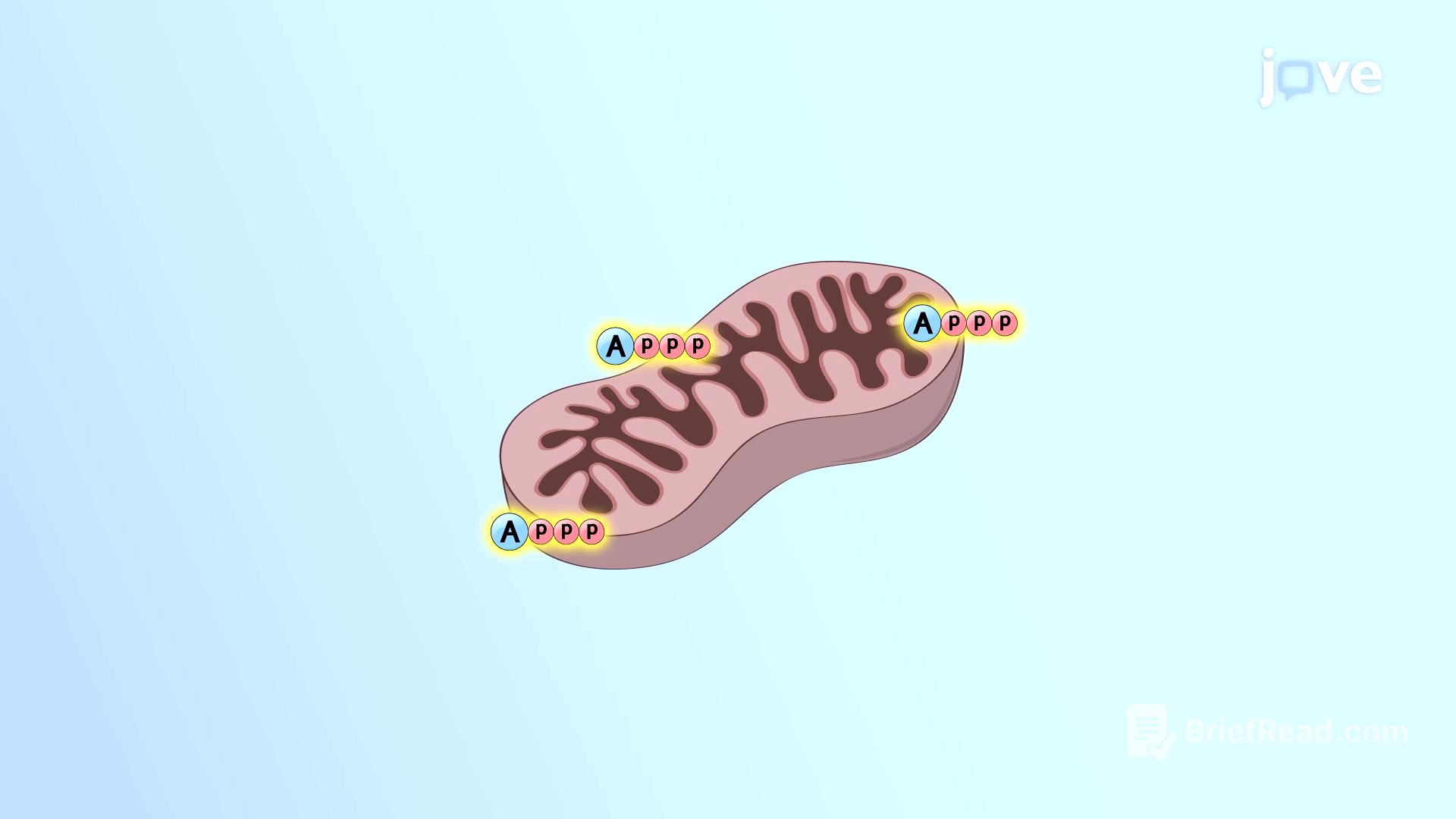

AI-Induced Psychosis [33:07]

The episode concludes with a Reddit post about ChatGPT-induced psychosis. These models are mirrors for people in some profound way: you can see in them what you want to see. Some kind of religious cult will likely emerge from people's interactions with these agents.