TL;DR

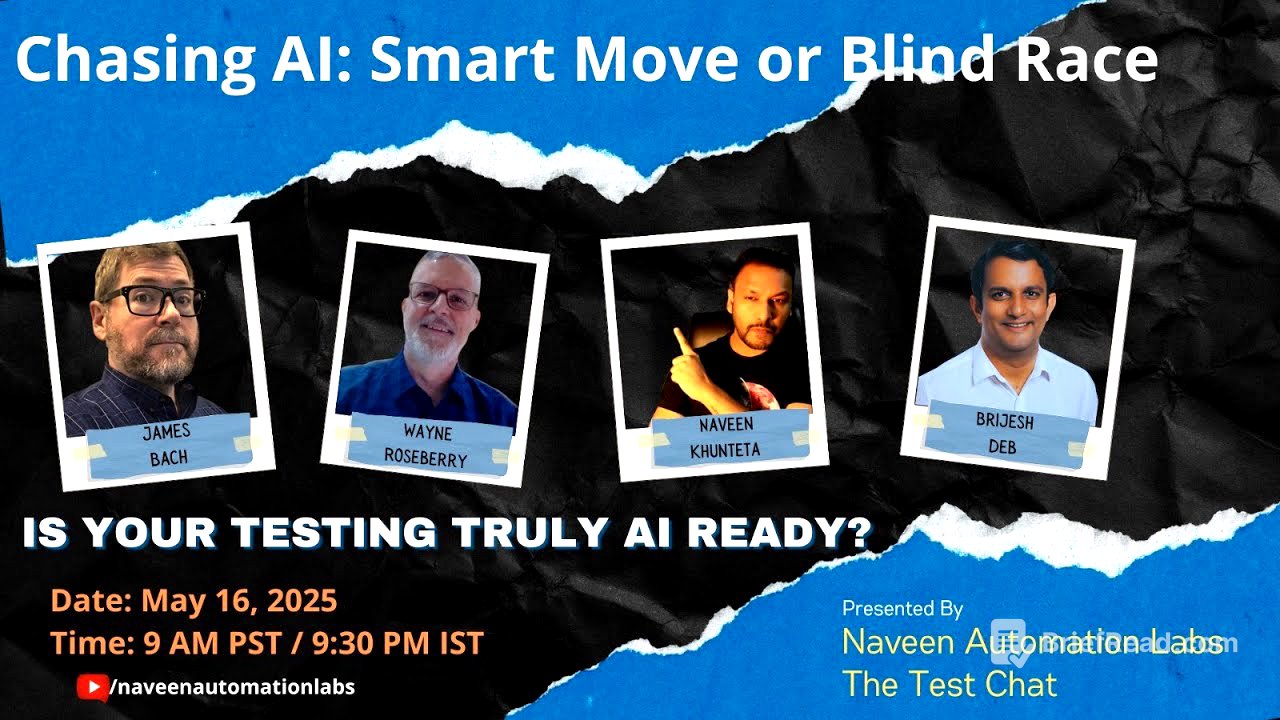

This YouTube video features a panel discussion on the readiness and impact of AI in software testing. The panelists, James Bach, Wayne Roseberry, and Naveen Khata, discuss what it means to be "AI-ready," whether AI could replace testers, and the skills testers need to navigate the evolving landscape. They emphasize critical thinking, continuous learning, and responsible AI implementation, highlighting both the potential benefits and the risks of over-reliance on AI in testing.

- Being AI-ready means understanding AI tools, thinking critically, and being skeptical.

- AI is a tool, and like any tool, it has its strengths and weaknesses.

- Testers should focus on delivering value and demonstrating their unique skills.

Introduction [0:06]

The host introduces the panelists: James Bach, Wayne Roseberry, and Naveen Khata. The discussion focuses on whether testing teams are truly ready for AI and what that readiness entails.

What Does "AI Ready" Mean for a Testing Team? [2:49]

James Bach defines AI readiness as being aware of the different types of AI, especially large language models (LLMs), and understanding the tools built on them. He stresses critical thinking and skepticism toward AI output, because many of its answers can be flawed. He likens AI to a "crazy brother-in-law" that testers must learn to live with and use effectively.

AI as a Tool: Benefits and Limitations [6:47]

Wayne Roseberry suggests thinking of AI as a toolset and emphasizes that testers should keep a broad toolkit, using each tool for its specific purpose. He cautions against the hype surrounding AI, because LLMs can produce convincing but incorrect output. He points out that LLMs predict text statistically rather than thinking or reasoning, and that testers must understand this difference to avoid being misled.

Skills and Knowledge for AI in Testing [13:09]

Naveen Khata believes that using AI in testing well requires solid skills and knowledge of the tools. He notes that many people adopt AI simply because of pressure from management or peers, without considering whether their project actually needs it. He shares examples of LLMs producing incorrect code and stresses the importance of knowing when to use AI and when to avoid it. He suggests AI can be useful for generating synthetic test data for edge cases.

The Danger of Over-Reliance on AI [17:10]

James Bach raises a concern: if people believe a tester is "just using AI," that tester's perceived skill and value diminish. He compares it to someone presenting the results of a Google search as their own work. Wayne Roseberry counters that skilled testers can use AI to generate better test cases than people without that expertise can.

The Importance of Critical Evaluation [19:54]

Wayne Roseberry and James Bach discuss the importance of critically evaluating AI-generated content. They share examples of AI tools providing incorrect information and explain why testers need to verify and corroborate AI results. They emphasize that the burden falls on both the deliverer and the receiver of information to ensure it is accurate and trustworthy.

Panelists' Experience with AI [25:13]

The panelists share their backgrounds and experience with AI. James Bach discusses his programming experience and his work with LLMs. Wayne Roseberry talks about building machine learning models and using AI to drive testing on Microsoft Office. Naveen Khata recounts his work with data science teams on a project involving predictive modeling for fleet management.

Building AI Agents for Testing [34:10]

Naveen Khata discusses using tools such as make.com and n8n to build AI agents for testing. He emphasizes the importance of having a specific use case and providing the right context. He also warns about the potential for bias in AI models, particularly in areas like loan approval and hiring.

Risks and Responsibilities in AI Implementation [37:02]

James Bach shares a cautionary tale about a company that incurred a multimillion-dollar fine because of a tester's mistake involving sensitive data. He argues against blindly trusting AI agents, especially in critical applications, and stresses the need for human oversight.

The Role of Interactive AI-Assisted Coding [40:01]

Wayne Roseberry highlights the benefits of interactive AI-assisted coding with tools such as GitHub Copilot, while Naveen Khata raises concerns about its impact on how new programmers learn. James Bach shares an example of a student who "vibecoded" a tool with AI but struggled to fix its bugs.

AI as a Safety Net and Critique Tool [46:25]

James Bach suggests using AI to critique one's own work, acting as a safety net. He shares an example of an AI tool identifying a security vulnerability related to outdated fonts that he would not have otherwise considered.

Reviewing Test Cases with AI [47:57]

James Bach discusses the idea of writing test cases yourself and then asking AI to review them. He acknowledges that this can be a responsible use of AI, but emphasizes the need for careful review and critical thinking. He shares a personal anecdote about missing a systematic bias in AI-generated test cases.

Prompt Engineering and Contextual Understanding [55:48]

Naveen Khata stresses the importance of prompt engineering and having conversations with AI to provide the right context. He advises against uploading sensitive information to the cloud and suggests hosting LLMs locally for confidential projects.

AI for Summarization and Learning [59:12]

The panelists discuss the usefulness of AI for summarizing long documents and research papers, but caution that the summaries should still be carefully reviewed for accuracy. They agree that AI can be a valuable tool for those who already have expertise in a subject.

Companies' Understanding of AI in Testing [1:00:44]

James Bach doubts that companies truly understand what they are asking for when they say "use AI in testing." He notes that many people make claims about AI's capabilities without considering its reliability. He shares an anecdote about a student who pointed out a flaw in his approach to using AI for a decision table.

Iterative Prompting and Community Standards [1:04:35]

The panelists discuss the importance of iterative prompting and "prompt sculpting" to get the best results from LLMs. They emphasize the need to develop community standards and ethics for using AI responsibly.

AI for Test Data Generation [1:09:34]

Wayne Roseberry shares his experience using LLMs for test data generation, specifically for creating fuzzy data for clustering algorithms. He notes that the imprecision and wackiness of LLMs can be perfect for certain types of content generation.

AI Replacing Testing Roles [1:12:48]

Naveen Khata believes AI will not replace testers but will change the way testing is done. He emphasizes the need for human intelligence and the limitations of AI in understanding full context and engaging with stakeholders.

The Real Threat to Testing Jobs [1:15:52]

Wayne Roseberry argues that the real threat to testing jobs is decision-makers who decide they don't need testers. He stresses the importance of testers delivering value and demonstrating their unique skills to justify their roles.

The Impact of Misguided Decisions [1:19:52]

James Bach shares a story of a former client who fired their test team based on the promise of an AI tool, only to rehire them after the tool failed. He emphasizes that the problem is not AI itself, but "idiots" who think they don't need testers because they have AI.

Desperation vs. Innovation [1:27:34]

James Bach suggests that some companies may be turning to AI out of desperation due to financial constraints. He presents two heuristics for working with "magic boxes": if something really matters, don't rely on magic; but if you have no other way to do it, you might as well try magic.

Three Things Testers Need to Be Ready for the AI Wave [1:29:33]

- James Bach: Collect examples of AI getting things wrong, stay current with the latest LLM versions, and ask AI to write programs that do the work rather than asking it to do the work directly.

- Wayne Roseberry: Remember you are helping someone solve a problem, bring value through critical thinking and analysis, and share learnings with the team.

- Naveen Khata: Speak up about the pros and cons of AI, learn the basics of AI/ML, and avoid becoming overly dependent on AI models; keep learning to code.

Conclusion [1:35:57]

The host thanks the panelists and audience for the discussion. She reiterates the importance of critical thinking and announces that the points shared by James Bach will be added to the video description.