TL;DR

Andrej Karpathy discusses the evolving landscape of software development in the age of AI, highlighting the shift from traditional coding (Software 1.0) to neural networks (Software 2.0) and now to programming with Large Language Models (LLMs) using natural language (Software 3.0). He draws parallels between LLMs and utilities, fabs, and operating systems, suggesting that we are in the "1960s" of LLMs, a time ripe with opportunity for innovation. Karpathy emphasizes the importance of understanding the psychology of LLMs, designing applications with partial autonomy, and fostering human-AI collaboration. He also touches on the concept of "vibe coding," where natural language programming makes software development more accessible.

- Software is undergoing a fundamental shift with the advent of LLMs, marking a new era of programming in natural language.

- LLMs possess characteristics of utilities, fabs, and operating systems, indicating a computing landscape reminiscent of the 1960s.

- Understanding the psychology of LLMs is crucial for effective collaboration and application design.

- Designing LLM applications with partial autonomy and focusing on human-AI collaboration loops are key to successful integration.

- The accessibility of programming via natural language ("vibe coding") democratizes software development.

Intro [0:00]

Andrej Karpathy introduces the topic of software in the age of AI, noting that the field is undergoing fundamental change. He points out that software did not change this drastically for about 70 years, yet it has recently changed rapidly twice. This transformation leaves a great deal of software to write and rewrite, a significant opportunity for those entering the industry.

Software evolution: From 1.0 to 3.0 [1:25]

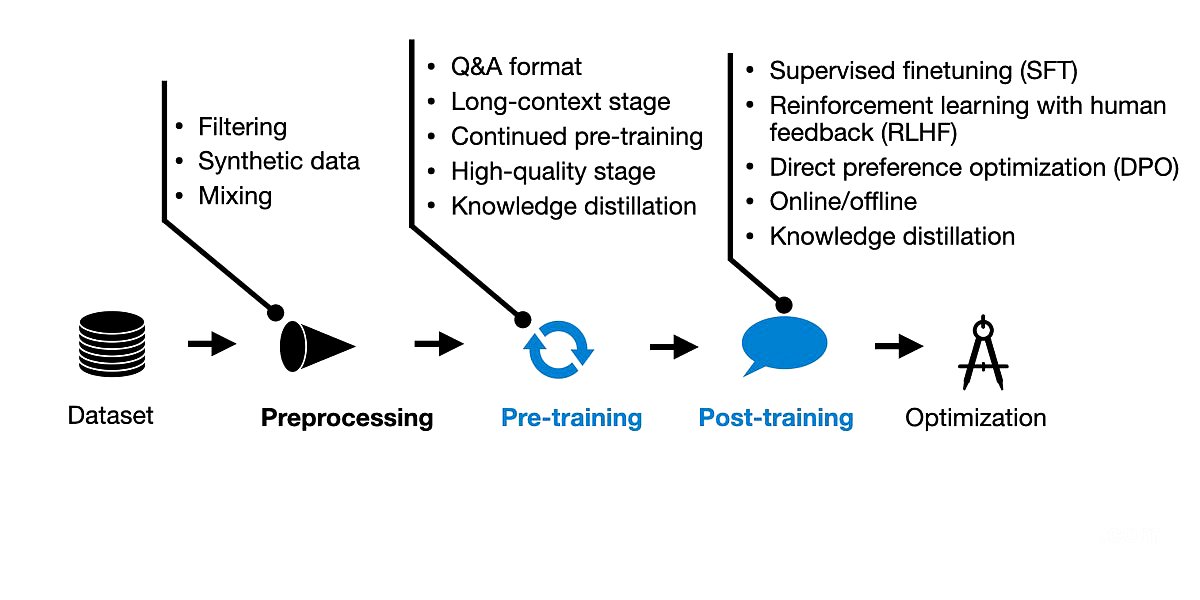

Karpathy describes the evolution of software, starting with Software 1.0: conventional code that people write to instruct computers. Software 2.0 consists of neural networks and their weights, where the behavior is not written directly but emerges from training on datasets. He identifies LLMs as a new type of computer, deserving the designation Software 3.0, where prompts written in English serve as programs. He illustrates this with the example of sentiment classification, showing how it can be approached with Python code, neural networks, or LLMs.
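The sentiment classification contrast can be sketched as follows. This is a minimal illustration, not code from the talk: the word lists, function names, and prompt wording are all assumptions, and the Software 2.0 variant is omitted because it would need a labeled dataset and a training step.

```python
# Software 1.0: the behavior is spelled out by hand as explicit rules.
# The word lists are hypothetical and deliberately tiny.
POSITIVE_WORDS = {"good", "great", "love", "excellent"}
NEGATIVE_WORDS = {"bad", "awful", "hate", "terrible"}


def classify_sentiment_v1(text: str) -> str:
    """Count positive and negative keywords and compare the totals."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English prompt handed to an LLM.
# Only the prompt is built here; sending it to a model is left out
# because the choice of provider and client is outside the summary.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the review below as positive, negative, "
    "or neutral.\n"
    "Answer with a single word.\n\n"
    "Review: {review}\n"
    "Sentiment:"
)


def build_sentiment_prompt(review: str) -> str:
    """Fill the English 'program' with the input to classify."""
    return PROMPT_TEMPLATE.format(review=review)
```

In the 1.0 version, changing behavior means editing rules; in the 3.0 version, it means editing the English text of the prompt.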

Programming in English: Rise of Software 3.0 [4:40]

Karpathy shares an anecdote from his time at Tesla, where he observed the Autopilot system transitioning from C++ code (Software 1.0) to neural networks (Software 2.0). As the neural network's capabilities grew, the C++ code was gradually removed, with the neural network effectively "eating" the original software stack. This illustrates the shift towards new forms of software that erode existing stacks. He emphasizes the importance of being proficient in all three programming paradigms (1.0, 2.0, and 3.0) due to their unique strengths and weaknesses.

LLMs as utilities, fabs, and operating systems [6:10]

Karpathy draws parallels between LLMs and utilities, fabs, and operating systems. Like utilities, LLM labs such as OpenAI and Google invest heavily in training models, comparable to building out an electrical grid, and then serve intelligence through metered API access. LLMs also resemble fabs because of the high capital expenditure required to create them. Finally, he likens LLMs to operating systems, highlighting the complex software ecosystems emerging around them.

The new LLM OS and historical computing analogies [11:04]

Karpathy compares LLMs to operating systems, with context windows acting as memory and LLMs managing computation. He draws an analogy to the 1960s, when computing was expensive and centralized, which led to time-sharing systems. He suggests that we are in a similar era with LLMs, where compute is still costly and primarily cloud-based. He also notes that interacting with an LLM directly via text feels like communicating with an operating system through a terminal.

Psychology of LLMs: People spirits and cognitive quirks [14:39]

Karpathy discusses the psychology of LLMs, describing them as "people spirits" or stochastic simulations of people. He notes that LLMs possess encyclopedic knowledge and memory, akin to the character in "Rain Man," but also exhibit cognitive deficits such as hallucinations and uneven intelligence. He also points out that LLMs suffer from "anterograde amnesia," lacking the ability to retain and consolidate knowledge over time. He stresses the importance of considering these limitations when working with LLMs.

Designing LLM apps with partial autonomy [18:22]

Karpathy explores the design of LLM applications with partial autonomy, using Cursor as an example. He highlights the importance of application-specific GUIs, which allow humans to oversee the work of these unreliable systems and move faster. He introduces the concept of an "autonomy slider," where users can adjust the level of autonomy granted to the LLM based on the complexity of the task.
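One way to picture the autonomy slider is as an ordered set of scopes, where the user's setting caps how much the model may change without asking. The sketch below is an assumption-laden illustration: the level names and the `route_change` helper are hypothetical and do not describe Cursor's actual implementation.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical slider positions, from least to most autonomous."""

    COMPLETE_LINE = 0   # model only suggests a short completion
    EDIT_SELECTION = 1  # model may rewrite a highlighted region
    EDIT_FILE = 2       # model may rewrite a whole file
    EDIT_PROJECT = 3    # model may change anything in the project


def route_change(requested: Autonomy, slider: Autonomy) -> str:
    """Apply changes within the slider setting; escalate wider ones."""
    if requested <= slider:
        return "apply"
    return "ask_human"
```

With the slider at `Autonomy.EDIT_FILE`, a selection-level edit is applied while a project-wide change is sent back to the human for approval.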

The importance of human-AI collaboration loops [23:40]

Karpathy emphasizes the importance of human-AI collaboration loops, where AI handles generation and humans focus on verification. He suggests that speeding up the verification process and keeping AI "on a leash" are crucial for effective collaboration. He advocates for smaller, incremental changes and rapid iteration to ensure quality and safety.
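The generate-and-verify loop can be sketched as a small function in which the model proposes a draft, a human accepts or rejects it, and any feedback flows into the next attempt. Everything here is an assumption for illustration: `collaborate`, `fake_model`, and `fake_reviewer` are hypothetical stand-ins, and the round limit is one simple way to keep the AI "on a leash."

```python
from typing import Callable, Optional, Tuple


def collaborate(
    generate: Callable[[Optional[str]], str],
    verify: Callable[[str], Tuple[bool, Optional[str]]],
    max_rounds: int = 3,
) -> Optional[str]:
    """Alternate AI generation and human verification for a few rounds."""
    feedback: Optional[str] = None
    for _ in range(max_rounds):
        draft = generate(feedback)          # AI side: produce a small change
        accepted, feedback = verify(draft)  # human side: accept or comment
        if accepted:
            return draft
    return None  # give up and hand control back to the human


# Stubs standing in for a real model and a real reviewer.
def fake_model(feedback: Optional[str]) -> str:
    return "small diff" if feedback is None else f"small diff ({feedback})"


def fake_reviewer(draft: str) -> Tuple[bool, Optional[str]]:
    if "add a test" in draft:
        return True, None
    return False, "add a test"


result = collaborate(fake_model, fake_reviewer)
```

Keeping each draft small is what makes the `verify` step fast, which is the part of the loop the talk argues should be optimized.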

Lessons from Tesla Autopilot & autonomy sliders [26:00]

Drawing from his experience with Tesla Autopilot, Karpathy reiterates the concept of partial autonomy and the use of autonomy sliders. He shares a story about his first experience with a self-driving car in 2013, highlighting the challenges and complexities of achieving full autonomy. He cautions against overhyping the progress of AI agents and emphasizes the need for human oversight.

The Iron Man analogy: Augmentation vs. agents [27:52]

Karpathy uses the analogy of the Iron Man suit to illustrate the difference between augmentation and agents. He suggests that, at this stage, it is better to focus on building "Iron Man suits" that augment human capabilities rather than fully autonomous "Iron Man robots." He emphasizes the importance of creating products with custom interfaces and rapid human-in-the-loop verification.

Vibe Coding: Everyone is now a programmer [29:06]

Karpathy discusses the accessibility of programming with LLMs, noting that natural language interfaces make everyone a programmer. He references the concept of "vibe coding," where programming is more intuitive and accessible. He shares examples of his own "vibe coding" projects, including an iOS app and MenuGen, highlighting the ease with which he was able to create these applications.

Building for agents: Future-ready digital infrastructure [33:39]

Karpathy explores the idea of building digital infrastructure specifically for AI agents. He suggests creating llms.txt files to provide LLMs with information about a domain, similar to robots.txt for web crawlers. He also notes the importance of providing documentation in formats that are easily readable by LLMs, such as Markdown. He highlights examples of services like Vercel and Stripe that are pioneering this approach.
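As a rough sketch of what such a file might contain, the helper below renders a small Markdown document with a title, a one-line summary, and sections of annotated links. The layout follows the general shape of the llms.txt proposal as an assumption, and the site name, URLs, and `render_llms_txt` function are hypothetical.

```python
from typing import Dict, List, Tuple

Link = Tuple[str, str, str]  # (name, url, note)


def render_llms_txt(
    title: str, summary: str, sections: Dict[str, List[Link]]
) -> str:
    """Render a Markdown overview that an LLM can read in one pass."""
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical site; example.com URLs are placeholders.
llms_txt = render_llms_txt(
    "Example Docs",
    "API documentation for a hypothetical payments service.",
    {
        "Docs": [
            (
                "Quickstart",
                "https://example.com/docs/quickstart.md",
                "Install and first request",
            ),
        ],
    },
)
```

Linking to Markdown versions of each page, rather than rendered HTML, matches the point about serving documentation in formats that LLMs read easily.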

Summary: We’re in the 1960s of LLMs — time to build [38:14]

Karpathy concludes by summarizing the key points of his presentation. He reiterates that we are in the "1960s" of LLMs, a time of great opportunity for innovation. He emphasizes the importance of understanding the psychology of LLMs, adapting infrastructure to meet their needs, and building applications with partial autonomy. He encourages the audience to embrace this new era of software development and build the future together.