TLDR;

This video summarizes the key trends and changes in AI-assisted coding in 2025, based on insights from industry experts like Simon Willison and Peter. It covers the rise of reasoning models, coding agents, and the shift towards "vibe coding," where AI tools generate a significant portion of code. The video also discusses the increasing capabilities of AI in handling longer tasks, the normalization of risky AI practices, and the competitive landscape of AI models, with OpenAI losing its lead to other players.

- Reasoning models became standard, enabling AI to plan and execute multi-step tasks.

- Coding agents like Claude Code emerged, allowing AI to write, execute, and iterate on code.

- "Vibe coding" and "vibe engineering" are new approaches where developers rely on AI for code generation and assistance.

- AI models can now handle tasks that previously took humans hours, significantly increasing developer output.

- The AI landscape is becoming more competitive, with Chinese models and other players challenging OpenAI's dominance.

Intro [0:00]

The year 2025 was transformative for AI-assisted coding due to smarter models and advancements in reasoning and agent technologies. Tools like Claude Code and OpenCode now run directly in developers' terminals, significantly reducing the time spent in code editors. This shift has changed how the industry codes, with transitions happening more rapidly than before.

Blacksmith Ad [1:29]

Blacksmith is a tool designed to improve CI build times, reducing costs by 75% through faster cache downloads, enhanced hardware, and rapid Docker builds. It offers observability features like action search and failure analysis, increasing confidence in PR outcomes by identifying flaky tests. Companies like Supabase and Clerk have adopted Blacksmith for its reliability and efficiency.

The Year of Reasoning [3:44]

Reasoning models marked a significant shift in AI, allowing models to create their own context and iterate on ideas before reaching conclusions. DeepSeek's R1 model played a crucial role in popularizing reasoning, as it allowed users to see how the process worked. This approach has become standard, with even skeptical entities like Anthropic adopting it. Reinforcement learning with verifiable rewards has driven capability progress, so labs now put more effort into post-training.
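
To make "verifiable rewards" concrete, here is a minimal sketch of a reward that is computed by an automatic check rather than by human judgment. Everything in it is an assumption for illustration: the function name `verifiable_reward`, the convention that the candidate defines a function called `solve`, and the all-or-nothing scoring. It is not any lab's training code, and a real setup would run candidates in a sandbox rather than with `exec`.

```python
from typing import Any, Iterable, Tuple

# One test case: (positional arguments, expected return value).
TestCase = Tuple[Tuple[Any, ...], Any]


def verifiable_reward(candidate_source: str, test_cases: Iterable[TestCase]) -> float:
    """Return 1.0 if the candidate's `solve` passes every test case, else 0.0.

    Toy illustration only: `exec` on untrusted model output is unsafe
    outside a sandbox.
    """
    namespace: dict = {}
    try:
        exec(candidate_source, namespace)  # define the candidate's functions
        solve = namespace["solve"]         # hypothetical naming convention
        passed = all(solve(*args) == expected for args, expected in test_cases)
        return 1.0 if passed else 0.0
    except Exception:
        # Syntax errors, missing `solve`, and runtime failures all score zero.
        return 0.0


if __name__ == "__main__":
    tests = [((1, 2), 3), ((0, 0), 0)]
    print(verifiable_reward("def solve(a, b):\n    return a + b", tests))
    print(verifiable_reward("def solve(a, b):\n    return a - b", tests))
```

The point of the sketch is that the score comes from running the output against a check, which is what lets this kind of training scale without a human grading each answer.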

The Year of Agents [8:21]

Agents, defined as LLMs running in a loop to achieve goals by using tools, have become useful in coding and search. Claude Code is a prominent example of coding agents, capable of writing, executing, and iterating on code. All major labs have released their own coding CLIs. Anthropic built Claude Code around the capabilities they anticipated models would have in the future, which proved successful as models improved.
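
The "LLM running in a loop with tools" definition can be sketched in a few lines. This is an illustrative toy, not how Claude Code or any other product is implemented: `fake_model` is a hard-coded stand-in for a real model call, and the tool names and the `call <tool> <args>` / `done <answer>` text protocol are invented for the example.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry; names and behavior are illustrative only.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split())),
    "upper": lambda arg: arg.upper(),
}


def fake_model(history: List[str]) -> str:
    """Stand-in for an LLM: requests one tool call, then reports the result."""
    last = history[-1]
    if last.startswith("goal:"):
        return "call add 2 3"
    if last.startswith("result:"):
        return "done " + last[len("result:"):].strip()
    return "done"


def run_agent(goal: str,
              model: Callable[[List[str]], str] = fake_model,
              max_steps: int = 5) -> str:
    """Ask the model for an action, run the tool, feed the result back, repeat."""
    history = [f"goal: {goal}"]
    for _ in range(max_steps):
        action = model(history)
        history.append(action)
        if action.startswith("done"):
            return action[len("done"):].strip()
        _, name, arg = action.split(" ", 2)  # "call <tool> <args>"
        history.append(f"result: {TOOLS[name](arg)}")
    raise RuntimeError("step budget exhausted before the model finished")


if __name__ == "__main__":
    print(run_agent("add two numbers"))
```

The step budget (`max_steps`) is a design choice added here so that a model that never says `done` cannot loop forever.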

LLMs on the Command Line [15:06]

LLMs have found their way to the command line, with tools like Claude Code and Codex making the CLI more accessible to developers. Because LLMs can generate terminal commands with obscure syntax, the barrier to entry is lower. Developers are now more comfortable configuring their CLIs, leading to increased usage and customization.

The Year of YOLO and the Normalization of Deviance [16:49]

The "YOLO" (you only live once) approach, where users trust AI tools to run without safety measures, has become common. This normalization of deviance, where risky behavior is accepted due to repeated exposure without negative consequences, poses security risks. The longer these systems run insecurely, the closer the industry gets to a potential disaster.

The Year of the $200 a Month Sub [19:18]

The normalization of $200 a month subscriptions for AI services has become a trend, driven by users who heavily utilize these models. These subscriptions enable high-inference users to do significant work, subsidized by other subscriptions and API usage. However, this model creates challenges for businesses that rely on these models, as they face higher costs and struggle to compete with subsidized pricing.

The Year of Chinese Models [23:02]

Chinese AI labs have made significant progress, with models like GLM 4.7 and DeepSeek V3.2 performing well. These open-weight models are competitive, with some outperforming non-Chinese models in certain benchmarks. The rise of Chinese models provides an alternative to Western AI offerings.

Long Tasks [24:08]

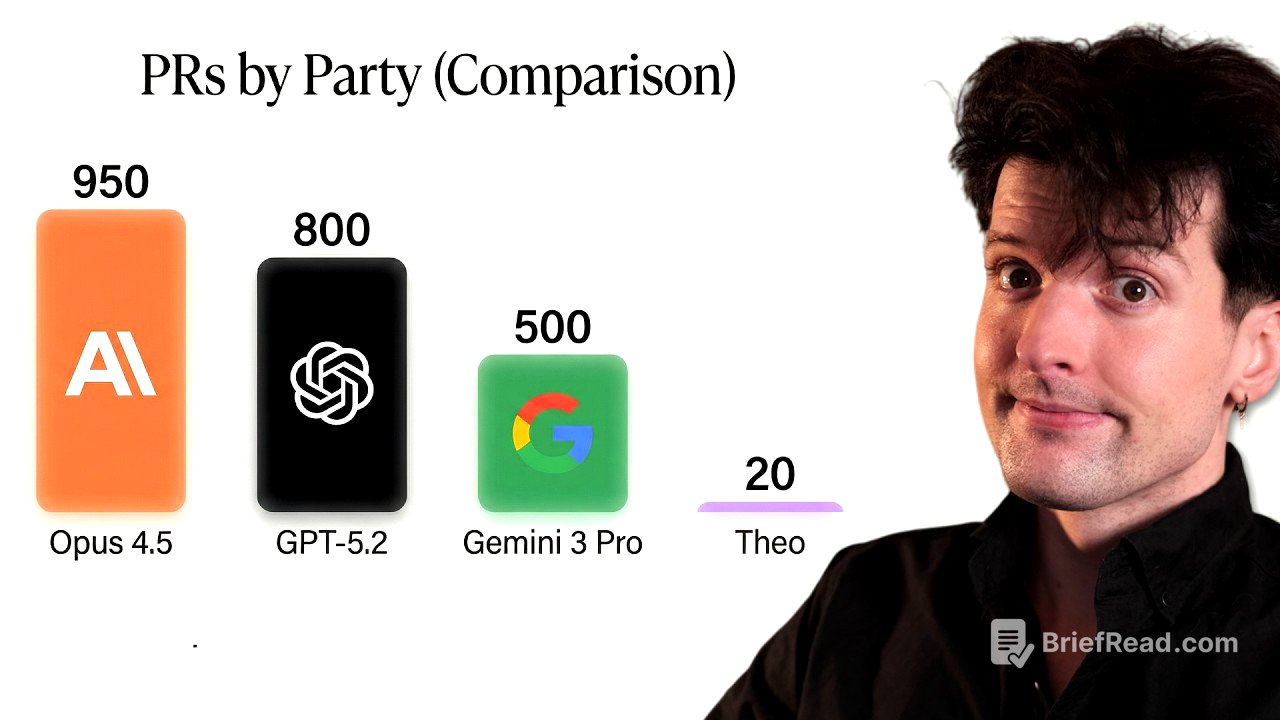

AI models can now handle longer tasks, with Opus capable of completing tasks that would take a human five hours with a 50% or higher success rate. This increase in task length has led to larger pull requests and higher lines of code per developer. The length of tasks AI can do is doubling every seven months, significantly increasing developer output.
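
As a rough worked example of what "doubling every seven months" implies, the sketch below extrapolates a task horizon forward in time. It assumes the trend simply continues, which the video does not guarantee; the function name and the default of seven months are illustrative choices.

```python
def projected_horizon_hours(current_hours: float,
                            months_ahead: float,
                            doubling_months: float = 7.0) -> float:
    """Extrapolate the task horizon assuming steady exponential doubling."""
    return current_hours * 2 ** (months_ahead / doubling_months)


if __name__ == "__main__":
    # Starting from a five-hour horizon, two doublings (14 months) give 20 hours.
    print(projected_horizon_hours(5, 14))
```

Under that assumption, a five-hour horizon today becomes ten hours in seven months and twenty hours in fourteen.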

The Year OpenAI Lost Their Lead [27:35]

OpenAI has fallen into second place in many areas, prompting an internal "code red." The adoption of Anthropic's SDK has grown significantly, narrowing the gap with OpenAI's SDK. Gemini is capable and poses a threat, especially in bulk data processing and image generation.

The Majority of the Code We Write is No Longer Written by Hand [30:29]

The majority of code is now generated by AI, leading to a significant increase in the amount of code produced. Tools like Claude Code have made it easier to build useful applications, resulting in developers publishing more code than ever before. Simon Willison built 110 tools, showcasing the ease of creating specific solutions with AI assistance.

The Year of the Snitch [32:39]

Model "snitching," where a model with tool access reports what it perceives as serious user wrongdoing to outside parties such as authorities, has become a notable phenomenon. Benchmarks measuring model snitching are now referenced in various contexts, highlighting the increasing awareness and research in this area.

The Year of Vibe Coding [33:38]

"Vibe coding," where developers rely on AI to generate code without thoroughly reviewing it, has evolved. The term has been appropriated for using AI to code at all, even with code review. As models and tools improve, developers are increasingly engaging in traditional vibe coding for one-off changes and side projects. "Vibe engineering" is a term for professional engineers using AI assistance to build production-grade software.

The Only Year for MCP [38:55]

The Model Context Protocol (MCP), an open standard for integrating tool calls with LLMs, saw a surge in popularity in early 2025. However, its adoption may have been driven by the timing of its release coinciding with models becoming better at tool calling. The rise of coding agents and the effectiveness of shell commands have diminished the need for MCP.

The Year of Programming on My Phone [41:38]

Developers are increasingly writing code from their phones, leveraging vibe coding and tools like Claude Code on the web. The capabilities of models like Opus 4.5 have enabled more complex coding tasks to be performed on mobile devices. The availability of conformance suites, which provide test benches for models, has improved the ability of models to fix errors.

The Year of Slop [44:13]

The term "slop" has gained popularity, reflecting the increasing amount of low-quality or unnecessary content generated by AI. Public opinion has shifted against data centers due to environmental concerns. Simon Willison's favorite words of the year include "vibe coding," "vibe engineering," "context rot," and "slopsquatting."