TLDR;

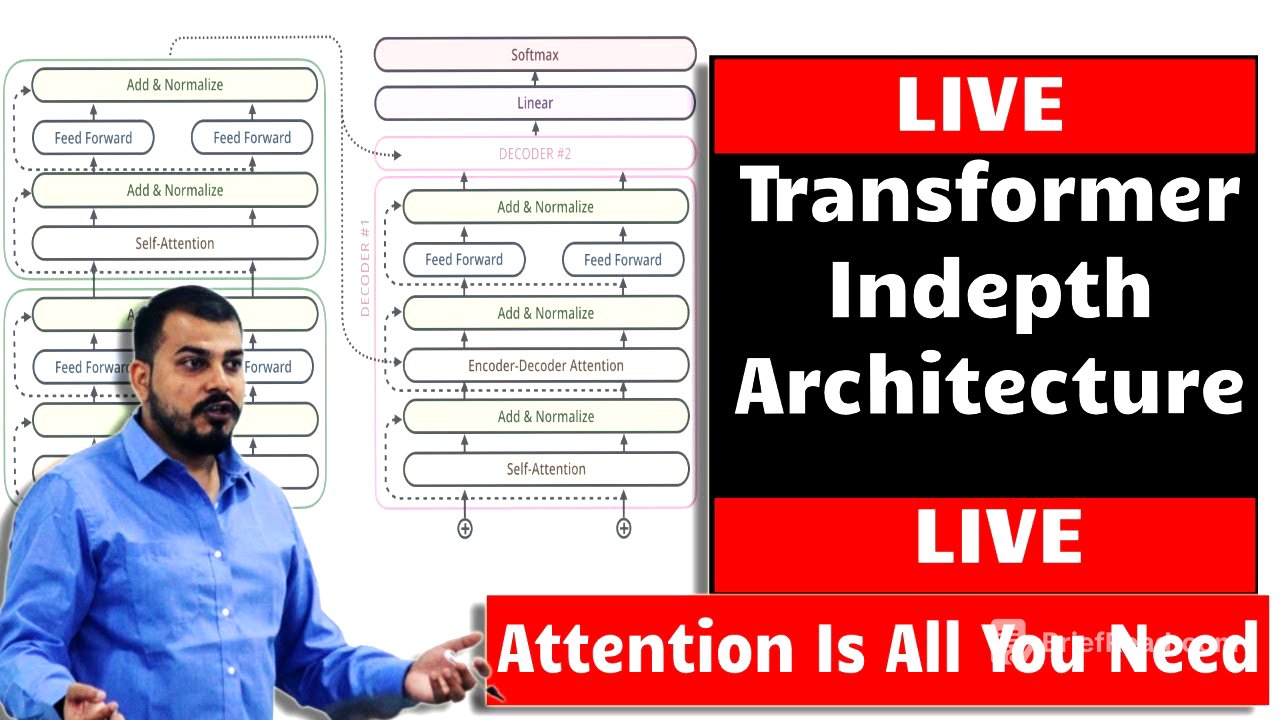

Alright guys, in this session, we're diving deep into Transformers, understanding the architecture and intuition behind "Attention is All You Need." Big shoutout to Jay Alamar for his amazing blog that makes understanding Transformers way easier. We'll cover encoders, decoders, self-attention, multi-head attention, and positional encoding.

- Transformers convert one language to another.

- Encoders and decoders are key components.

- Self-attention helps understand word relationships.

- Positional encoding maintains word order.

Introduction to Transformers [1:38]

So, we're starting with Transformers, inspired by the "Attention is All You Need" research paper. It took a while to understand this, and we'll also cover BERT later. This session is about understanding the architecture, and we'll look at practical implementations with Hugging Face in another session. Big respect to the researchers who've done amazing work on this.

The "Attention is All You Need" Paper and Jay Alamar's Blog [3:39]

The "Attention is All You Need" paper was a collaboration with Google Brain and University of Toronto. The architecture can be tough to grasp at first glance. Jay Alamar's blog is super helpful, explaining Transformers in an animated way. We'll use his blog to understand Transformers and check out his YouTube channel for more content.

Transformers as a Blackbox [9:17]

Think of a Transformer as a blackbox model that converts sentences from one language to another. Inside, it has encoders and decoders. Encoders take an input (like a French sentence) and produce an output. Decoders then convert this output into the desired language (like English).

Inside Encoders and Decoders [12:10]

Inside, there are multiple encoders stacked together. The research paper mentions using six encoders for good results, but you can change this number. The input goes through these encoders, and the final encoder's output is fed to the decoders.

Self-Attention and Feed Forward Neural Network [16:04]

Each encoder has two main parts: self-attention and a feed-forward neural network. Self-attention is derived from the "Attention is All You Need" paper and plays a crucial role.

Input to the Encoder: Embedding Layer [17:41]

The input text is converted into dimensions using an embedding layer (like Word2Vec). Each word becomes a vector of a specific size (e.g., 512 dimensions). The research paper used Word2Vec for this conversion. All inputs are given at the same time, unlike RNNs.

Self-Attention Explained [23:37]

Self-attention helps the model understand which words in a sentence are most related to each other. For example, in the sentence "The animal did not cross the street because it was too tired," self-attention helps the model determine whether "it" refers to the animal or the street.

Self-Attention in Detail: Creating Queries, Keys, and Values [27:44]

First, create three weights (WQ, WK, WV) that are randomly initialized. Then, multiply the input vectors (X1, X2) by these weights to get queries (Q1, Q2), keys (K1, K2), and values (V1, V2). The dimensions are reduced from 512 to 64 in this step.

Calculating Scores and Applying Softmax [33:00]

For each word, multiply the query with all the keys to get a score. Then, divide the score by the square root of the key dimension (DK), which is 8 in this case. Apply a softmax activation function to get probabilities. Softmax helps find the most important words based on probability.

Final Steps in Self-Attention [42:37]

Multiply the softmax value by the value vectors (V1, V2) to get different vectors. Add these vectors to get Z1. This Z1 is the output of the self-attention layer. All these steps can be summarized in four main steps: input, QKV creation, score calculation, and final Z value calculation.

Multi-Head Attention [49:33]

Instead of using a single set of weights (single-head attention), use multiple sets of weights (multi-head attention). This helps the model capture different relationships between words. The research paper uses eight heads. Combine all the Z values from each head and multiply by another matrix to get the final Z value.

Importance of Multi-Head Attention [57:23]

Multi-head attention allows the model to focus on different aspects of the sentence. For example, one head might focus on the relationship between "it" and "animal," while another focuses on the relationship between "it" and "tired."

Positional Encoding [59:13]

Positional encoding helps the model understand the order of words in a sentence. Add positional encoding vectors to the input embeddings before feeding them to the encoder. This helps the model understand the distance between words.

Residual Connections and Layer Normalization [1:02:57]

Each sub-layer in the encoder has a residual connection and layer normalization. Residual connections allow skipping layers if they're not important. Layer normalization helps normalize the output.

Decoders: Key Differences [1:07:28]

Decoders also have self-attention and feed-forward neural networks, but they include an encoder-decoder attention layer. The output of the encoder is passed to this layer. The decoder generates output one word at a time, unlike the encoder, which processes all inputs in parallel.

Decoder Output and Sequential Generation [1:11:11]

The output from the decoder is fed back as input to generate the next word. This process continues until the end-of-statement token is generated. The blog by Jay Alamar is highly recommended for understanding these concepts.

Final Thoughts and Shoutout to Jay Alamar [1:15:11]

Transformers work well and are state-of-the-art. Big thanks to Jay Alamar for his amazing blog. In the next session, we'll discuss BERT. Please subscribe and share this video.