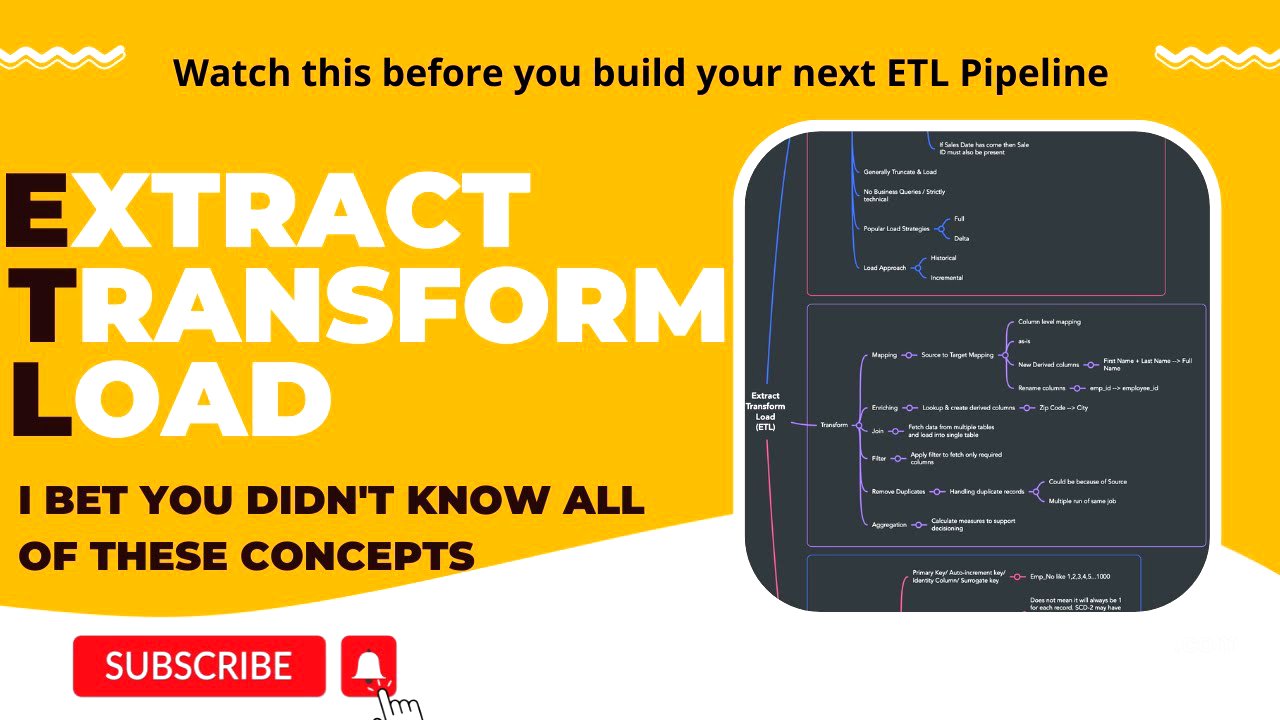

TLDR;

This video gives a rundown of the ETL process, which is extract, transform, and load, important for building data warehouses. It covers key points for each stage, from getting data from various sources to loading it into a data warehouse.

- Extracting data from different sources and formats

- Transforming data for consistency and business needs

- Loading data into dimensions, facts, and data marts

Introduction [0:00]

The video is about extract transform load (ETL), a crucial topic for anyone working with data warehouses. It's useful for SQL beginners and experienced professionals alike, covering important aspects of ETL development. The video will cover the important points which everybody must know as part of ETL.

Extract [1:00]

Extracting data means getting it from various sources like databases (OLTP), flat files, or surveys. These sources can send data in different formats. The goal is to get this data as quickly as possible. Common methods include using flat files, connecting via JDBC/ODBC, or receiving files via SFTP to a landing area due to security concerns. Real-time streaming with Kafka or Kinesis is also used for businesses needing immediate data processing, like credit card companies. Depending on the business needs, data can be extracted in real-time or in batches (daily, weekly).

Data Dump from Source [1:05]

The source extracts data from their environment and sends it in flat file formats. If the source is running some typical database solutions as OLTP sources, they may even let you connect via JDBC and ODBC, and then you can connect to those sources and extract the data yourself. In some cases, due to data governance and security, the source may push files via SFTP to a landing area for consumption.

No Complex Logic [4:10]

In the extraction phase, which usually happens in the staging area, complex logic is avoided. Simple calculations, like determining age from a date of birth, can be done, but complex transformations are not suitable at this stage.

Data Format Consistency [4:40]

Data format consistency is important because data comes from multiple sources. For example, a gender column might have "male," "female," and "others" from one source, "M," "F," "O" from another, and "0," "1," "2" from a third. All these need to be standardized to a single format (like M, F, O) in the data warehouse. Similarly, date formats (YYYY-MM-DD, etc.) need to be consistent across all data.

Data Quality Rules [6:07]

Data quality rules can be applied during extraction. For instance, if a business started in 2015, sales data should be from that year onwards. Data not meeting this rule can be sent to an error table or ignored. Other rules include limiting the length of description columns to save storage or ensuring that if a sales date is present, a sales ID must also be present.

Truncate & Load [7:48]

Staging tables typically use a truncate and load approach. Each day, the table is emptied, and the new data is loaded. This data is then processed. The next day, the table is completely cleared again, and the fresh data is loaded.

No Business Queries [8:10]

No business queries should run in the extraction phase. The staging tables are strictly for technical purposes to support ETL pipelines. The business team should not even have access to the staging area.

Load Strategies [8:40]

Popular load strategies include full and delta loads. For small tables (hundreds or thousands of rows), a full load is used, where the entire table is replaced each time. For larger tables, a delta load is better, where only the changes (updates, deletes, new records) are loaded. The source may flag records as insert, delete, or update, making the process easier. If not, the staging table must be compared with the target table to identify changes.

Load Approach [10:03]

The initial load is a historical load, bringing in all data to date. After that, incremental loads are run daily or at whatever frequency is needed.

Transform [10:40]

In the transform phase, data ingested into staging tables undergoes various transformations and mapping rules to make it more meaningful. Raw data is converted into meaningful information through predefined steps, resulting in enriched data.

Mapping [11:33]

Mapping involves source-to-target column mapping. For every incoming column, there's a corresponding mapping in the target table. This can be an as-is mapping (data moved without changes) or a column-level mapping. New derived columns can also be created, like combining first and last names into a full name. Columns can also be renamed, such as changing "emp_id" to "employee_id."

Enriching [12:33]

Enriching data means adding more information to make it more meaningful. For example, if the source sends a zip code, a lookup can be done to add the city name to the data.

Joins [13:17]

Joins involve combining data from multiple tables into a single table. This is useful when the source sends data in separate tables, but it makes more sense to combine it for reporting purposes.

Filter [13:49]

Filtering transformation involves selecting specific data based on certain criteria. For example, if the source sends global data, but you only need data for North America, you can filter the incoming data to include only North American data.

Remove Duplicates [14:35]

Removing duplicates is a crucial step. Duplicates can occur if the source sends the same file again or if the same job is run multiple times. ETL pipelines should be designed to handle duplicate records to avoid data quality issues.

Aggregation [15:54]

Aggregation involves calculating measures to support business needs. This is similar to using a GROUP BY clause in SQL to calculate sums, averages, max, and min values, making the data more meaningful.

Load [16:46]

In the load phase, data is loaded into dimension tables, fact tables, EDW (Enterprise Data Warehouse) tables, and data marts. Intermediate tables may be temporary, directly loading dimensions and facts.

Dimensions [17:39]

Dimension tables should have a primary key, often an auto-increment column or surrogate key, to ensure uniqueness. They must also have a functional identifier or natural key. Attributes provide information about the dimension, such as employee name, employee ID, and date of birth for an employee dimension table. Load strategies include SCD1, SCD2, SCD3, or hybrid models. Granularity must be properly defined to ensure accurate reporting.

Facts [20:47]

Fact tables have a primary key (surrogate key) and foreign keys, which are the primary keys from the dimension tables. Dimension tables are loaded first, and fact tables reference them. Fact tables contain measures like total sales and total revenue, calculated using aggregation functions.

EDW Tables [22:10]

EDW tables are the main business layer, storing processed data crucial for business decisions. These tables are exposed to the business, reporting, or BI teams. EDW supports BI and shares processed data with downstream applications, acting as a source for other teams' ETL pipelines.

Data Marts [23:17]

Data marts are subject-specific areas derived from the EDW for focused analysis. They contain separate tables with subject-specific data and are used for reporting and visualization.